Yaw aided Radar Inertial Odometry using Manhattan World Assumptions

Christopher Doer and Gert F. Trommer

28th Saint Petersburg International Conference on Integrated Navigation Systems (ICINS), 2021

[Paper] [Code] [Datasets] [Video_1] [Video_2]

Abstract

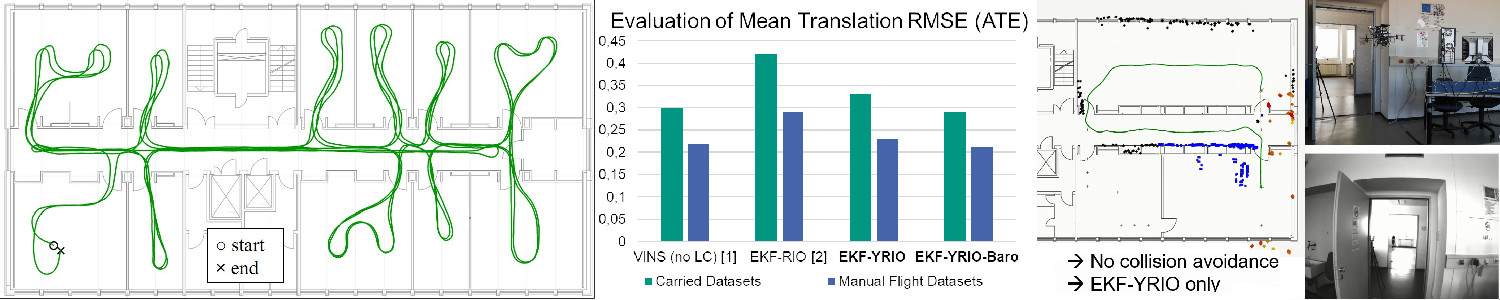

Accurate localization is a key component for autonomous robotics and various navigation tasks. Navigation in GNSS-denied and visually degraded environments is still very challenging. Approaches based on visual sensors tend to fail in poor visual conditions such as darkness, fog or smoke. Therefore, our approach fuses inertial data with FMCW radar scans, which are not affected by such conditions. We extend a filter-based approach to 3D Radar Inertial Odometry with yaw aiding using Manhattan world assumptions for indoor environments. Once initialized, our approach enables instantaneous yaw aiding using only a single radar scan. The proposed system is evaluated on various indoor datasets recorded with a carried sensor platform and with a drone. Our approach achieves accuracy similar to state-of-the-art Visual Inertial Odometry (VIO) while being able to cope with degraded visual conditions and requiring very few computational resources. We achieve run times many times faster than real time, even on a small embedded computer. This approach is well suited for online navigation of drones, as demonstrated in indoor flight experiments.
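The abstract describes yaw aiding from a single radar scan under a Manhattan world assumption. The Python sketch below illustrates only the general idea. It is not the authors' implementation. It makes several hypothetical assumptions: wall directions have already been extracted from one radar scan as 2D angles in the horizontal body frame, and the world-frame orientation of the Manhattan grid (`manhattan_angle`) was estimated once at initialization. It also replaces the full filter update with a scalar Kalman update on yaw alone. All function names are illustrative.

```python
import math


def wrap_to_pi(angle):
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


def dominant_direction_mod_90(directions):
    """Circular mean of line directions that have 90-degree symmetry.

    Multiplying each angle by 4 maps all four Manhattan directions onto the
    same point of the unit circle. The result lies in [-pi/4, pi/4].
    (Hypothetical helper for summarizing wall directions from one scan.)
    """
    s = sum(math.sin(4.0 * d) for d in directions)
    c = sum(math.cos(4.0 * d) for d in directions)
    return math.atan2(s, c) / 4.0


def manhattan_yaw_measurement(yaw_prior, wall_angle_body, manhattan_angle):
    """Hypothetical yaw pseudo-measurement under a Manhattan world assumption.

    yaw_prior:       current filter yaw estimate [rad]
    wall_angle_body: direction of a detected wall in the body frame [rad]
    manhattan_angle: world-frame orientation of the Manhattan grid [rad]

    Walls are assumed parallel to one of manhattan_angle + k * pi/2.
    The k closest to the prior prediction is chosen, which assumes
    the prior yaw error is below 45 degrees.
    """
    # World-frame wall direction predicted by the prior yaw
    predicted = yaw_prior + wall_angle_body
    # Index of the nearest Manhattan direction
    k = round((predicted - manhattan_angle) / (math.pi / 2.0))
    wall_world = manhattan_angle + k * math.pi / 2.0
    # Yaw that aligns the observed wall exactly with that direction
    return wrap_to_pi(wall_world - wall_angle_body)


def yaw_update(yaw, var_yaw, yaw_meas, var_meas):
    """Scalar Kalman update of yaw (a simplification of a full filter update)."""
    innovation = wrap_to_pi(yaw_meas - yaw)  # wrap so 3.1 vs -3.1 is a small error
    gain = var_yaw / (var_yaw + var_meas)
    return wrap_to_pi(yaw + gain * innovation), (1.0 - gain) * var_yaw


# Example: one scan, walls observed at about 0.1 rad modulo 90 degrees
wall = dominant_direction_mod_90([0.1, 0.1 + math.pi / 2.0, 0.1 - math.pi])
z = manhattan_yaw_measurement(yaw_prior=1.6, wall_angle_body=wall,
                              manhattan_angle=0.2)
yaw, var = yaw_update(1.6, 0.01, z, 0.01)
```

Take `manhattan_angle = 0.2` rad, a prior yaw of 1.6 rad, and a wall observed at 0.1 rad in the body frame. The nearest Manhattan direction is then 0.2 + π/2 rad, so the pseudo-measurement is about 1.671 rad. Choosing the direction closest to the prior makes a single scan sufficient. It also means the sketch gives wrong results if the prior yaw error exceeds 45°.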

Cite

@INPROCEEDINGS{DoerICINS2021,
  author={Doer, Christopher and Trommer, Gert F.},
  booktitle={2021 28th Saint Petersburg International Conference on Integrated Navigation Systems (ICINS)},
  title={Yaw aided Radar Inertial Odometry using Manhattan World Assumptions},
  year={2021}
}